As previously mentioned, I planned to spend yesterday rewriting Deelang‘s parser with the aim of fixing some long-term issues and revisiting some design decisions that get in the way of the DEX compiler. As it turned out, I didn’t have as much time as I wanted to spend on this (as usual!) but I did get some time to play with it, and it turned out that my decision to rewrite much of the parser was, well, somewhat premature.

The main aim of the rewrite was to change the way the AST is generated, to remove the imaginary CHAIN nodes that proliferate the current AST. CHAIN nodes are used as the LHS in field accesses and method calls, as well as one or two other places, and denote that the receiver is the result of the previous operation. They work great when compiling for a stack machine (such as the JVM or the original Dee VM) – they translate simply as “pop the top object from the stack” – but are less intuitive when compiling for a register architecture, such as Dalvik.

Chain nodes and stack machines

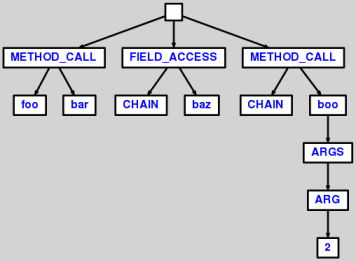

Let’s look at an example. Here’s the AST generated by the current parser for the code foo.bar().baz.boo(2):

This clearly shows the CHAIN nodes, and how they relate to the previous expression in the tree. If you run this tree through your mental interpreter you’ll see that, when the compiler encounters a CHAIN node it only needs to pop the top stack value to find the receiver.

Aside: In actual fact, it almost never needs to actually pop the receiver, as the current stack-top is usually the right place for the generating opcode to find it, but that’s not relevant for our discussion today.

So, CHAIN works great for stack machines. It’s intuitive, and more importantly it’s easy (read: efficient) to compile.

The Dalvik situation

Android’s Dalvik virtual machine, as you may be aware, is not a “traditional” JVM-like stack machine, but instead uses a register architecture. The motivation for this design is primarily (according to Google) efficiency and flexibility on mobile platforms (there’s plenty more to read on this subject – a quick web search will keep you occupied for a whole afternoon if you’re interested). The relative merits and demerits of registers over stack are another post entirely – for our purposes we need only to acknowledge that Dalvik uses registers; that if we want to compile DEX bytecode so must we; and that that’s just the way it is.

CHAIN doesn’t work quite so well in this situation. When we’re tracking registers in the DEX compiler, CHAIN means that we need to keep around a record of the target register of the previous instruction at all times, just in case the next instruction is be chained. It also prevents us doing certain basic optimizations, because we can’t know whether the current instruction is actually unused, or if it’s referenced by a CHAIN on the next instruction. Of course, we could add a lookahead mechanism to take care of this, but register tracking is already a fairly complex beast that really doesn’t need any extra levels of code muddying the waters.

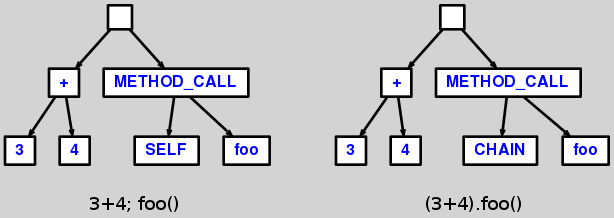

Possibly the simplest example of this is given by the code 3+4; foo() vs. (3+4).foo(). The ASTs for these are shown below:

In the first case, the compiler could optimize away the 3+4 completely, since it’s result is never used. In Deelang this would save two object allocations (for the DeelangIntegers), three method calls (the two constructors, and the __opADD), and a target register. However, without looking ahead to the next instruction there’s no way this can be done – the compiler simply can’t know when processing the 3+4 whether or not the next instruction will CHAIN a method call with the result of the addition.

Aside: This issue also exists with the Dee VM, of course. Since we have more control over runtime optimisation on that platform, it’s less of an issue.

So we should rewrite the parser, right?

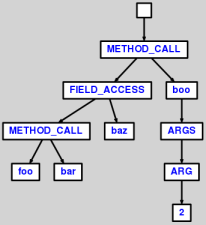

This was my first reaction. As I mentioned previously, doing this was on my to-do list for some time. The ideal version of the first AST, above, for the DEX compiler would be:

Obviously, this could be done by modifying the parser itself, and that’s the road I started down yesterday. I extracted out the CHAIN logic into a new grammar so I could play with it without impacting all the other stuff in the current Deelang grammar, with the plan that eventually I’d merge the new logic back in. I played with various ways of building the AST, and after several attempts with different ways of parsing, I realised that I wasn’t getting anywhere. It took a little more thinking before I realised that I was never going to be happy with changing the parser this way, for a simple reason: it works well as it is, and I could only swap complexity in one compiler for complexity in the other.

Let me expand on that a bit: Deelang isn’t exclusively about the Dex compiler. We still support (and plan to continue supporting) the Dee VM compiler and runtime, and maybe even a JVM compiler one day. Removing CHAIN is counter-intuitive to both these cases. While the DEX compiler has to jump through a few hoops to track registers for chaining, without chaining the situation would be far more complex in the DeeVM compiler, and any future Java bytecode compiler.

This still leaves us unable to make certain optimisations in the DEX compiler. Some further consideration of that situation led me to realise that there’s not actually that much difference between the first AST and the ‘ideal’ AST for the DEX compiler. Really, all I needed to do was walk the tree, keep track of the previous instruction, and when I encountered a CHAIN, simply remove it and replace it with that saved previous instruction. In other words, I could rewrite the AST, rather than rewriting the parser. (Finally, a direct reference to the title of this post!)

Once I’d come to this conclusion, I did what I should have resolved to do in the first place – I cleaned up the current parser grammar a little, and fixed up the bugs I knew were there, but without changing the AST. There was no need to change any tests, and the Dee VM compiler continued to work exactly as before. I then wrote a new, very simple, and very small ASTVisitor implementation that would mutate the tree, bringing chained instructions together under the appropriate tree. The AST above is generated by ANTLR, based on just such a modified tree.

There are still some pros and cons to be considered here – not least that we’re traversing the entire tree twice during the DEX compile. However, this is very inexpensive, since visiting any node simply means stuffing that tree into an instance variable and then visiting the children. Only when a CHAIN is visited does any real work take place, and even then it’s only an array search (with less than 5 elements generally) and a couple of reference updates.

Obviously this will eventually be subject to profiling, and if it turns out to be a bottleneck then it’ll be revisited. But with the amount of work the actual compiler saves by not having to dance around target registers quite so much, and the increased clarity of the code thanks to that, I seriously doubt this will have any negative impact on compiler performance.

The moral of the story

If there is a message to all this, it’s probably that even if you subscribe to the old adage of “plan to throw one away” (which I don’t particularly, at least not in general), it’s important not to be too eager to actually discard your implementation. Play around with it a bit, try a few alternatives on a very limited basis, and you might just find a new perspective on the problem. Seems obvious, right?

It surprised me how easily I forgot it and almost wasted a whole day rewriting something non-trivial that really didn’t need rewriting.

2 thoughts on “Rewriting Vs. Rewriting”